Getting Started with AWS

Published October 12, 2021

I'm always looking for ways to expand my skillset, and in an increasingly digital, work-from-home oriented world, having a firmer grasp on web technologies seems like not a bad thing. But, as someone who considers themselves a technically competent nerd with no real credentials to speak of, where to get started?

The Cloud Resume Challenge seems like a useful, self-guided way to start, or at least a roadmap toward building a 'complete' resume project. So here we go!

The Challenge: 16 Steps

Completion of the challenge requires ticking 14 boxes to set up a simple static website on AWS with a required subset of features, acquiring an AWS Certified Cloud Practitioner certification, and wrapping it all up with a blog post about your experiences. Starting at the beginning, as usual, here's the first of the blog posts!

The AWS certification requires a $100 investment and, it seems, a genuine exam worth studying for. I imagine it will be significantly easier to study for after completing the legwork of the rest of the challenge, so I'll set that requirement aside for most of what follows.

Why AWS?

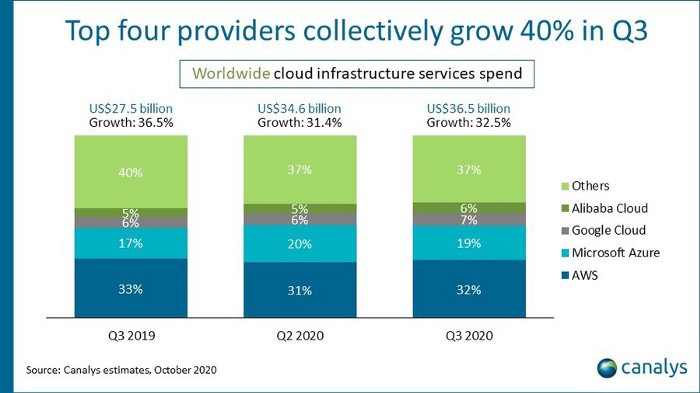

The Cloud Resume Challenge currently offers versions tailored to AWS, GCP, and Azure. I've chosen to go with AWS for the moment, but I'll admit, this is a fairly arbitrary choice based on my naïve impressions of the Amazon/GCP/Azure offerings. I'm sure I'll revise these opinions down the road, but my sense is:

- Amazon has a wider array of services offered, more industry reach, and a longer history of cloud presence. It seems to have a reputation for harder-to-parse naming and access conventions.

- GCP has a somewhat friendly access/account model, but less industry reach and a smaller real-world knowledge base.

- Microsoft Azure seems (again, from an outsider's perspective) to be targeted at existing businesses that are already used to Microsoft's products and tools.

Image from Veritis.com, Oct 2020

For a self-guided project, a deep well of online resources is really appealing. It doesn't look like I'll run into any significant limitations with any of the three platforms on a project this basic. With AWS seeming to be the market leader, it feels like I may as well go that way, and hopefully what I learn will be semi-transferable knowledge.

Setting up a Static Website on AWS S3

Getting Oriented

The Cloud Resume Challenge page directs users to this page on AWS, helpfully titled "Hosting a Static Website using Amazon S3." Neat!

Since this is a static website (i.e. made up only of static resources like HTML and CSS, possibly with some client-side Javascript but no server-side action), it seems that a static website on S3 is essentially a bunch of files plopped into a folder on Amazon's servers that people can point their web browsers at and view. Well, I'm sure a "bucket" is more complicated than just being a folder... but I'll cross that bridge when I need to.
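To make the "more complicated than a folder" point a little more concrete: as I understand it, S3 doesn't have real directories at all. Every object has a key, and a "folder" is just a shared key prefix ending in a slash. Below is a minimal sketch of how a request path on the website endpoint might map onto an object key, assuming the index document is `index.html` (which gets set up further down). The function name `path_to_key` is my own invention, not an AWS API, and it only covers the root and paths ending in a slash.

```python
# Hypothetical helper: S3 has no real directories. A key like
# "blog/post-1.html" is just a string that happens to contain a slash.
# This sketch maps a website request path onto an object key, assuming
# the index document suffix is "index.html".


def path_to_key(path: str, index_suffix: str = "index.html") -> str:
    """Map a URL path (e.g. "/", "/blog/", "/style.css") to an S3 object key."""
    key = path.lstrip("/")  # object keys don't start with a slash
    if key == "" or key.endswith("/"):
        # The root, or a "folder" request, gets the index document in that prefix.
        key += index_suffix
    return key


if __name__ == "__main__":
    for p in ["/", "/blog/", "/style.css"]:
        print(p, "->", path_to_key(p))
```

Paths without a trailing slash (like `/blog`) are handled differently; I believe S3 first looks for an object literally named `blog` before trying `blog/index.html`, so I've left that case out.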

Let's look through the AWS info documents listed on that page.

The Website Endpoints page indicates that, by making the contents of a bucket publicly accessible, anyone can point their browser at a fiddly URL like http://bucket-name.s3-website-Region.amazonaws.com (or, in some regions, http://bucket-name.s3-website.Region.amazonaws.com) and have access to that content. It goes on to talk a little about how to point one's DNS provider of choice at these specific resources, to use a more human-friendly URL. Or, one might use Amazon's Route 53 to... accomplish the same thing? The distinction eludes me at this point, but that feels like something I can come back to.
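The endpoint naming is mechanical enough to sketch in a few lines. The AWS documentation shows two variants: a dash form (`s3-website-Region`) used by a handful of older regions, and a dot form (`s3-website.Region`) used by the rest. The `DASH_REGIONS` set below is my best recollection of the dash regions and may well be incomplete, so treat the AWS endpoint table as the authority. `website_endpoint` is a hypothetical helper name.

```python
# Sketch: build the S3 static website endpoint URL for a bucket.
# Assumption: this set of dash-style regions is from memory and may be
# incomplete; every other region is assumed to use the dot style.
DASH_REGIONS = {
    "us-east-1", "us-west-1", "us-west-2",
    "ap-southeast-1", "ap-southeast-2", "ap-northeast-1",
    "eu-west-1", "sa-east-1",
}


def website_endpoint(bucket: str, region: str) -> str:
    """Return the website endpoint URL for `bucket` in `region`.

    S3 website endpoints serve plain HTTP only, hence the http:// scheme.
    """
    separator = "-" if region in DASH_REGIONS else "."
    return f"http://{bucket}.s3-website{separator}{region}.amazonaws.com"


if __name__ == "__main__":
    print(website_endpoint("hello-world-jg-cr-1", "us-east-2"))
```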

The Enabling Website Hosting page clarifies a little more the steps needed to make a bucket available as a website, saying: "When you configure a bucket as a static website, you must enable static website hosting, configure an index document, and set permissions." It seems any of these steps can be done in the online S3 console, with API requests, from code using Amazon's SDKs, or with their command line interface. While I'm sure programmatic access to these tools is really useful if you're spinning up sites in an automated fashion, I imagine I'll mostly be using the S3 console.

A Placeholder Page

None of this seems terribly complicated - I need to put some files in a bucket, then configure some settings so Amazon knows where to point people when they access my (very fiddly) URL. So I'll start by creating a very basic placeholder page to upload and play with. Time to get my hands dirty and learn.

For most of my scripting work at my day job, I use VS Code, which I happen to know has an HTML Boilerplate extension. The very generic HTML it generates is:

So, we've got our basic HTML document, with a <head> tag to start off, a <body> with basically no content yet... and that's it. Plus some comment-y things that seem to deal with older versions of Internet Explorer.

There's also a lot in here I don't understand yet, especially in the <head> section. What is a <meta> tag? Why do I need a viewport? I'll add these to my list of questions to answer down the road. I'm just diving forward to get this thing online at this point.

I'll add a brief <p> tag to the above boilerplate with the text "Hello, world!" That's it. That's the whole website for now.
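For reference, here's roughly what that page amounts to. This is my own stripped-down HTML5 skeleton rather than the extension's exact output (the Internet Explorer comments are gone), wrapped in a short Python script so the file can be regenerated. The comments on the two `<meta>` tags are a first pass at the questions above; `PAGE` and `write_index` are hypothetical names.

```python
from pathlib import Path

# A minimal HTML5 page; not the VS Code extension's exact output.
PAGE = """<!DOCTYPE html>
<html lang="en">
<head>
    <!-- Declares the character encoding so the browser decodes the text correctly -->
    <meta charset="utf-8">
    <!-- Asks mobile browsers to lay out at the device's width instead of a zoomed-out desktop width -->
    <meta name="viewport" content="width=device-width, initial-scale=1">
    <title>Hello, world!</title>
</head>
<body>
    <p>Hello, world!</p>
</body>
</html>
"""


def write_index(directory: str = ".") -> Path:
    """Write the placeholder page to <directory>/index.html and return its path."""
    path = Path(directory) / "index.html"
    path.write_text(PAGE, encoding="utf-8")
    return path


if __name__ == "__main__":
    print(f"Wrote {write_index()}")
```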

Making a Bucket

If this whole process is really as simple as allowing the public to see the files in one of my buckets, it feels like I'll need this file (now called index.html) to be in an S3 bucket. Let's figure out how to do that.

Searching for "S3" in the top search bar on AWS brings up a listing for the Simple Storage Service (S3) page...

...which has a create bucket button!

I think "Hello_World" would be a good name for this bucket... oh, except bucket names can only contain lowercase letters, numbers, periods, and hyphens... and must be globally unique. Alright, "hello-world-jg-cr-1" it is. I don't know what the advantages of the different regions are, but given that I'll likely be the only traffic to this site, I've chosen US East (Ohio) us-east-2, since I'm in the US Midwest.
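The naming rules are easy to trip over, so here's a minimal local check. It covers the rules mentioned above plus a few more I'm fairly confident the S3 documentation lists (3 to 63 characters, starting and ending with a letter or number, no adjacent periods, not shaped like an IP address). The docs also reserve a few prefixes and suffixes that this sketch doesn't check, and global uniqueness can only be confirmed by S3 itself when the bucket is created. `is_valid_bucket_name` is a hypothetical helper, not part of any AWS SDK.

```python
import ipaddress
import re

# Lowercase letters, digits, periods, and hyphens only,
# starting and ending with a letter or digit.
_NAME_RE = re.compile(r"[a-z0-9][a-z0-9.-]*[a-z0-9]")


def is_valid_bucket_name(name: str) -> bool:
    """Partial check of S3 bucket naming rules (uniqueness not included)."""
    if not 3 <= len(name) <= 63:
        return False
    if not _NAME_RE.fullmatch(name):
        return False
    if ".." in name:  # no two adjacent periods
        return False
    try:
        ipaddress.IPv4Address(name)  # e.g. "192.168.5.4" is not allowed
        return False
    except ipaddress.AddressValueError:
        pass
    return True


if __name__ == "__main__":
    for candidate in ["Hello_World", "hello-world-jg-cr-1"]:
        print(candidate, is_valid_bucket_name(candidate))
```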

Aha! The next section talks about access settings. I recall from the earlier page that setting permissions to allow public access is something I'll need to do to allow the site to be publicly available. Seems I'm able to do that right now, though I do have to check a big scary box saying I understand the risks.

There are some further options here. Versioning, which seems to keep every version of an object unless otherwise specified, is overkill for this tiny, bad website. Tags may be interesting later. Encryption is, again, overkill. And Object Lock, which prevents objects from being deleted or overwritten, would probably be more useful if I were adding and removing objects programmatically. I'll leave all of those features at their defaults for now.

Uploading

Hey, I've got a bucket! There's nothing in it, but it is indeed, as promised, a bucket. And it seems I can just upload my index.html file using the web UI...

Ah, here are some things I'll definitely want to go deep on at some point: permissions and storage classes. I know the different storage classes cost different amounts and have different restrictions, but I'll leave this as the default "Standard" for now. There are a couple of predefined access control lists, but given that the "Setting Permissions for Website Access" page from earlier recommends a specific setup, I'm going to leave this as Private for now and change things momentarily, I think.
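When uploading from code instead of the console, those choices become explicit arguments. Below is a sketch assuming the `boto3` SDK and configured AWS credentials; `upload_args` and `upload_site_file` are hypothetical helpers. The `Content-Type` is the detail I'd most expect to bite: as far as I can tell the console infers it from the file extension, but a file uploaded from code without one may be served as a generic binary type, in which case the browser may download the page instead of displaying it.

```python
import mimetypes
from pathlib import Path


def upload_args(filename: str) -> dict:
    """Build the ExtraArgs passed to boto3's upload_file for one site file."""
    content_type, _ = mimetypes.guess_type(filename)
    return {
        # Browsers need text/html (text/css, ...) to render rather than download.
        "ContentType": content_type or "application/octet-stream",
        "StorageClass": "STANDARD",  # the same default the console offers
    }


def upload_site_file(bucket: str, filename: str) -> None:
    """Upload a local file to the bucket root, keyed by its file name."""
    import boto3  # assumption: boto3 installed and AWS credentials configured

    s3 = boto3.client("s3")
    s3.upload_file(filename, bucket, Path(filename).name, ExtraArgs=upload_args(filename))


if __name__ == "__main__":
    print(upload_args("index.html"))
```

Leaving out an `ACL` argument matches the Private choice above; public read access comes from the bucket policy instead.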

Upload succeeded! Of course, trying to access that file online at the moment only yields an Access Denied error, but that was to be expected. I haven't completed all the required steps yet.

Static Website Hosting

Going back to the list of buckets, selecting my newly formed bucket, and clicking its Properties tab, I find it's dead easy to enable the "Static Website Hosting" setting as recommended.

It seems this step also takes care of the "Configuring an Index Document" step I anticipated from above. I enter "index.html" as my index document, so AWS knows what file to serve up when users access the "root" of the bucket.
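The same setting can be applied from code. This sketch assumes `boto3`'s `put_bucket_website` call and its `WebsiteConfiguration` structure, in which the index document is given as a `Suffix` because S3 appends it to any request ending in a slash. The error document is optional, and the helper names are hypothetical.

```python
from typing import Optional


def website_configuration(index: str = "index.html", error: Optional[str] = None) -> dict:
    """Build the WebsiteConfiguration structure for put_bucket_website."""
    config = {"IndexDocument": {"Suffix": index}}
    if error is not None:
        # Optional page served for errors such as a missing object.
        config["ErrorDocument"] = {"Key": error}
    return config


def enable_website_hosting(bucket: str) -> None:
    """Turn on static website hosting with index.html as the index document."""
    import boto3  # assumption: boto3 installed and AWS credentials configured

    boto3.client("s3").put_bucket_website(
        Bucket=bucket,
        WebsiteConfiguration=website_configuration(),
    )


if __name__ == "__main__":
    print(website_configuration())
```

If I've read the CLI docs right, `aws s3 website s3://hello-world-jg-cr-1/ --index-document index.html` does the same thing from the command line.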

Permissions

Since I didn't configure permissions during the upload, I'll do that now, following along with the Setting Permissions for Website Access page. This is essentially copying the example bucket policy from that page (swapping in my bucket name), pasting it into the in-browser bucket policy editor on the Permissions tab, and hitting save.
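The policy text is short enough to generate. This sketch reproduces the shape of the public-read example on that AWS page (a single statement granting `s3:GetObject` on every object to any principal) with the bucket name filled in; `public_read_policy` and `apply_policy` are hypothetical helpers, and the `boto3` call assumes configured credentials. I believe S3 rejects a public policy like this while Block Public Access is still switched on, which is presumably why the big scary checkbox came first.

```python
import json


def public_read_policy(bucket: str) -> str:
    """Return bucket policy JSON that lets anyone GET any object in `bucket`."""
    policy = {
        "Version": "2012-10-17",  # policy language version, not a date to update
        "Statement": [
            {
                "Sid": "PublicReadGetObject",
                "Effect": "Allow",
                "Principal": "*",            # anyone, including anonymous visitors
                "Action": ["s3:GetObject"],  # read-only: fetch objects, nothing else
                "Resource": [f"arn:aws:s3:::{bucket}/*"],  # every object in the bucket
            }
        ],
    }
    return json.dumps(policy, indent=4)


def apply_policy(bucket: str) -> None:
    """Attach the public-read policy to the bucket."""
    import boto3  # assumption: boto3 installed and AWS credentials configured

    boto3.client("s3").put_bucket_policy(Bucket=bucket, Policy=public_read_policy(bucket))


if __name__ == "__main__":
    print(public_read_policy("hello-world-jg-cr-1"))
```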

Success

Now, if I go to

Alright! My first website hosted on AWS! So clean, so crisp, so minimalistic.

Next Steps

Very loosely speaking, this checks off one-and-a-half of the 16 points on the Cloud Resume Challenge checklist:

(4) Static Website - Your HTML resume should be deployed online as an Amazon S3 Static Website.

(16) Blog Post - Link a short blog post describing some of the things you learned. (Of course, this is not that blog post, but it is a starting point and framework for those posts.)

To my eye, the remaining 14 goals fall into 5 separate topics. Here's what I think each entails, and which bullet points on the Cloud Resume Challenge they tick off:

Site Content

As catchy as "Hello World" is, a resume it is not. This chunk involves turning the website into meaningful content, formatted in a nice way, possibly with basic, general HTML, possibly with some kind of interesting framework. But content should be the primary focus of this chunk. I'm not sure what form that will take, to be honest, but something better than boilerplate is of course necessary.

This covers goals:

(2) HTML - Your resume needs to be written in HTML

(3) CSS - Your resume needs to be styled with CSS

Basic AWS Infrastructure

A cumbersome URL sitting in a bucket is not the most user-friendly interface, nor the most secure, since the S3 website endpoint only serves plain HTTP. I'll need to set up a proper domain name for my new site, and use something like CloudFront to serve the site over HTTPS. Since I'm already somewhat familiar with these processes outside of AWS, I hope this will be both educational and fairly straightforward.

This covers goals:

(5) HTTPS - The S3 Website URL should use HTTPS for security

(6) DNS - Point a custom DNS domain name to the site.

Site Features

There are a half-dozen goals in the Challenge that seem oriented toward exposing the participant to slightly more advanced website design. The result is a simple visitor counter, but it uses Javascript to query a database via an API and a Lambda function written in Python, all of which should be well tested and defined in a Serverless Application Model (SAM) template. I'm sure I'll find out what a SAM is along the way; I know what most of the other words are, at least.

This covers goals:

(7) Javascript - Your resume webpage should include a visitor counter that displays how many people have accessed the site.

(8) Database - The visitor counter will need to retrieve and update its count in a database somewhere.

(9) API - You will need to create an API that accepts requests from your web app and communicates with the database.

(10) Python - Write a bit of Python code in the Lambda function to achieve the API functionality.

(11) Tests - Include some tests for your Python code

(12) Infrastructure as Code - Define your database, API Gateway, and Lambda function in an AWS Serverless Application Model (SAM) template and deploy them
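To get a feel for how those pieces fit together, here's a rough sketch of the Python half: an API Gateway proxy-style Lambda handler that bumps a counter and returns it as JSON for the page's Javascript to display. Everything here is hypothetical. The database is stubbed out as an `increment` function so the handler can be tested without AWS; in a real deployment I'd expect that function to wrap a database update, and the wide-open `Access-Control-Allow-Origin` header should probably be narrowed to the site's own domain.

```python
import json
from typing import Callable


def make_handler(increment: Callable[[], int]):
    """Build a Lambda handler around a function that bumps and returns the count."""

    def lambda_handler(event, context):
        count = increment()
        # Response shape expected by an API Gateway proxy integration.
        return {
            "statusCode": 200,
            "headers": {
                "Content-Type": "application/json",
                # Lets the resume page's Javascript call the API from another origin.
                "Access-Control-Allow-Origin": "*",
            },
            "body": json.dumps({"count": count}),
        }

    return lambda_handler


class InMemoryCounter:
    """Stand-in for the database, for local tests only."""

    def __init__(self) -> None:
        self.value = 0

    def increment(self) -> int:
        self.value += 1
        return self.value


if __name__ == "__main__":
    handler = make_handler(InMemoryCounter().increment)
    print(handler({}, None)["body"])  # {"count": 1}
```

Since Lambda looks for a module-level function, a deployed version would presumably do something like `lambda_handler = make_handler(real_increment)` at the top level.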

Source Management/Deployment

You're telling me that manually uploading index.html from my hard-drive to a bucket isn't website development best-practices? Rude.

This chunk actually involves creating two separate GitHub repositories: one to manage the actual content of the site, the other for the backend features described above. Both can apparently be deployed automatically to their respective parts of the AWS infrastructure, with the backend only deploying when its tests pass. Neat!

This covers goals:

(13) Source Control - Create a GitHub repository for your backend code.

(14) CI/CD Back End - Set up GitHub actions such that when you push an update to your Serverless Application Model template or Python code, your tests get run, and, if passing, the changes get packaged and pushed to AWS.

(15) CI/CD Front End - Create a second GitHub repository for your frontend code; configure GitHub Actions so that when you push new code, your website gets updated.

Certification

To formally complete the Cloud Resume Challenge, one needs to earn the required AWS certification. I suspect this will be the final thing I complete, as it's somewhat separate from the process of actually constructing the site.

This covers goal:

(1) Certification - Your resume needs to have the AWS Cloud Practitioner Certification on it.

Onward

So where to go next? My impulse is to start with the GitHub repositories, since that should make development easier and I'm already fairly competent with a git/GitHub workflow. Then I'll dig into HTTPS/DNS, since hopefully those are straightforward. That leaves what feel like the larger development fronts: actual content, and all the backend voodoo to implement the visitor counter. It may actually be nice to bounce between those two, to keep the juices flowing when I stall on one. And once those projects get far enough along, it probably makes sense to start integrating the CI/CD pieces to make that process smoother.

That's the thought for now, but of course, no battle plan survives contact with the enemy. But it does all sound like fun.